While artificial intelligence (AI) has transformed productivity and employment around the world, many believe it still poses a threat, especially in warfare, where AI-controlled drones can be used. Recent reports suggest that the Pentagon has been pushing towards allowing these AI-controlled drones to pick and eliminate human targets. Let’s take a look at the reports and find out if killer robots are inevitable in the future.

Exploring the idea of lethal autonomous weapons

Drones controlled by artificial intelligence might be able to make autonomous decisions about whether to target and kill humans on the battlefield. While their decision-making abilities are still primitive, such AI-controlled drones are now closer to becoming reality than ever before.

The deployment of AI-controlled drones that can make autonomous decisions about whether to kill human targets is moving closer to reality, The New York Times reports.

Lethal autonomous weapons, that can select targets using AI, are being developed by countries including the US,… pic.twitter.com/fVjTdAP2Mo

— Clash Report (@clashreport) November 23, 2023

This development reportedly involves major powers such as the US, China, Russia and Israel, which are competing to possess the most advanced AI-enabled weapons in their arsenals. This has raised ethical and security concerns all over the world, even among their allies.

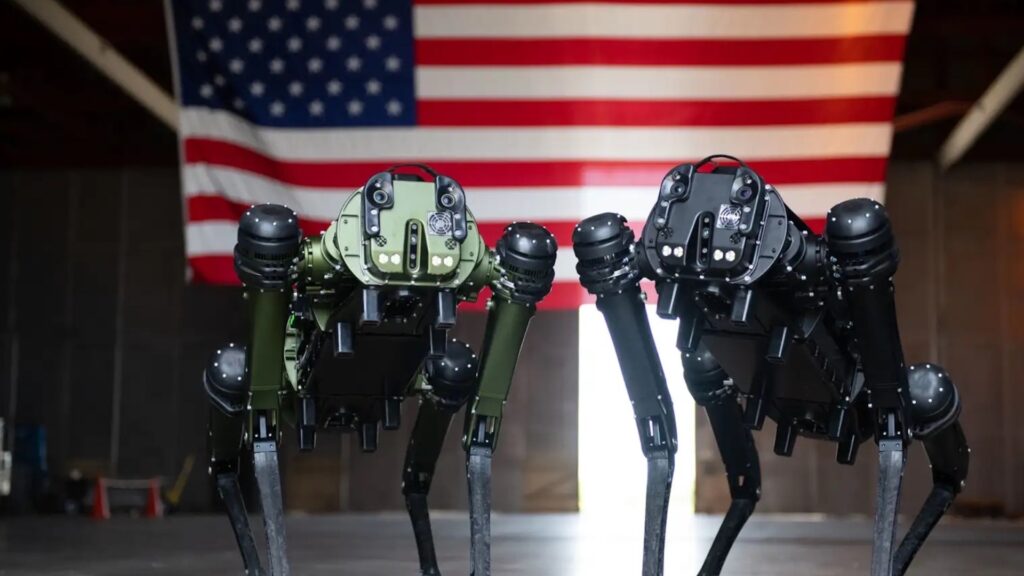

These weapons, colloquially called ‘killer robots’, would select human targets using artificial intelligence, letting technology make life-and-death decisions on the battlefield without any human input. While this may increase military efficiency, it removes emotion and humanity from the equation.

Will the Pentagon let AI-controlled drones kill human targets?

While AI-controlled drones have been discussed in theory for a long time, the United States Department of Defense, headquartered at the Pentagon, is reportedly working towards deploying them with the ability to make decisions on their own.

According to The New York Times, the Pentagon believes that the technology would give it a strategic edge, as it could offset the numerical advantages of adversaries like China’s People’s Liberation Army.

HUNTER-KILLER: Ukraine's ‘DroneHunter’ is an AI controlled anti-UAV platform detects and captures intruder drones outside designated geo-fenced zones. AI augmented targeting and flight controls allow fully autonomous operation. pic.twitter.com/0TVer7xmSk

— Chuck Pfarrer | Indications & Warnings | (@ChuckPfarrer) November 22, 2023

A mass of AI-enabled drones would be difficult for an adversary to plan against and harder to defeat. Some US Air Force officials claim that AI drones are the need of the hour, provided they make lethal decisions while remaining under human supervision.

According to New Scientist, some AI-controlled drones have already been observed in use during the Russia-Ukraine conflict. However, there is no information on the number of human casualties caused by lethal autonomous weapons.

The problem with using killer robots

The main question with using AI-controlled drones is: can we trust artificial intelligence to make such crucial decisions on the battlefield? It also raises the ethical conundrum of whether a strategic advantage is worth delegating life-and-death decisions to AI.

The rise of AI in the past few years has left governments baffled, as there is no existing regulation to control its use. Now that the Pentagon is reportedly pushing for killer robots, several governments are working with the United Nations to restrict the use of these AI-based weapons.

However, the global superpowers may be unmoved by these requests from smaller countries, since this is a complex security issue. Members of the public have also been speaking out against the unregulated growth of AI and its use in warfare, citing examples such as Skynet from the Terminator films on social media platforms.